2. Connect to Azure SQL

This tutorial is part of the Guided Setup series.

Destinations

Ingested data is written to a destination, from which it can then be consumed.

ELT Data writes the ingested data to:

- your cloud storage (AWS S3, Azure Blob Storage, or GCS), and

- optionally, a database of your choice.

In this tutorial, we will write the data to Azure Blob Storage and Azure SQL.

Create a new destination connection

We help you set up your cloud storage during onboarding, and you specify the storage location when configuring a pipeline. You do not need to create a separate connection to your cloud storage.

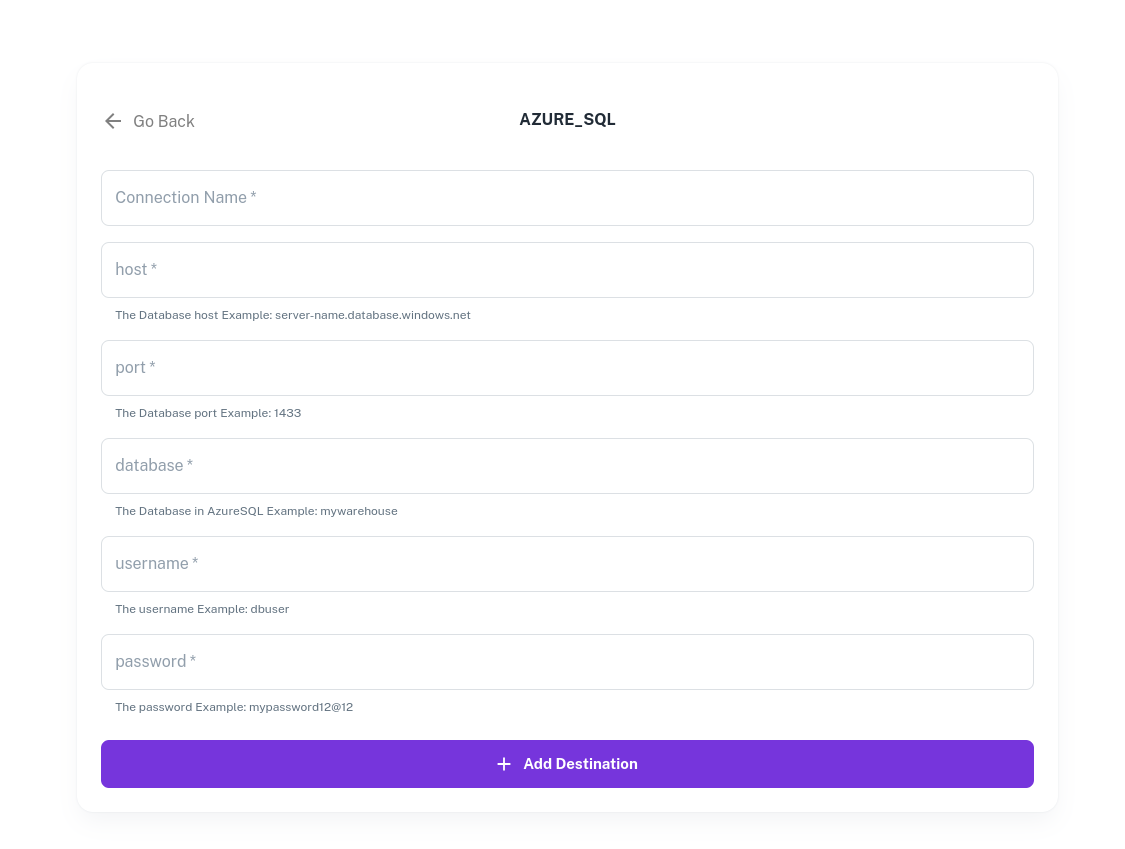

In ELT Data, navigate to the Destinations tab, click the "+ Add New Destination" button, and select "Azure SQL".

Fill in and submit the form.

You can keep your reporting database private and block access from all public IP addresses. Pipelines run within your cloud environment, so the pipeline code can connect to the database over a private network.
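If the database is reachable only over a private network, you can run a plain TCP check from a machine inside that network to confirm that it can reach the server. The sketch below assumes Azure SQL's default port, 1433. `can_reach` is a hypothetical helper and is not part of ELT Data. It tests network reachability only and does not check credentials. Depending on your server's connection policy, other ports may also need to be open.

```python
import socket


def can_reach(host: str, port: int = 1433, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Hypothetical helper: it only tests network reachability (firewall rules,
    DNS, private endpoint routing). It does not authenticate to the database.
    """
    try:
        # create_connection resolves the host and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts
        # (socket.timeout is a subclass of OSError).
        return False
```

Run it from inside your network with your own server name, for example `can_reach("myserver.database.windows.net")` (a hypothetical name). A `False` result there usually points to firewall, DNS, or private endpoint configuration rather than to the credentials.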

ELT Data encrypts and stores the credentials but does not verify them at this stage. They are verified only when a pipeline runs in your environment.
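ELT Data does not check the credentials when you submit the form, so you may want to validate them yourself before the first pipeline run. The sketch below builds a connection string you can test from a machine inside your network. It assumes the standard Azure SQL ODBC connection string format and Microsoft's ODBC Driver 18 for SQL Server. The function name and example values are hypothetical and are not part of ELT Data.

```python
def build_odbc_connection_string(
    server: str,
    database: str,
    user: str,
    password: str,
    driver: str = "ODBC Driver 18 for SQL Server",
    port: int = 1433,
) -> str:
    """Build an ODBC connection string for an Azure SQL database (hypothetical helper)."""

    def quote(value: str) -> str:
        # ODBC allows a value to be wrapped in braces so that ';' or '='
        # inside it are not treated as separators; a literal '}' is doubled.
        return "{" + value.replace("}", "}}") + "}"

    parts = {
        "Driver": "{" + driver + "}",
        "Server": f"tcp:{server},{port}",
        "Database": quote(database),
        "Uid": quote(user),
        "Pwd": quote(password),
        # Require TLS and validate the server certificate.
        "Encrypt": "yes",
        "TrustServerCertificate": "no",
        "Connection Timeout": "30",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"
```

You could then pass the result to `pyodbc.connect()` and run `SELECT 1` to confirm that the login works. Avoid printing or logging the string, because it contains the password.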

Data Policies and Privacy

Because the data is stored in your own cloud environment, you can govern and audit it under your own data policies.

The data never leaves your environment.